When AI Sounds Confident, but Is Wrong: How to Guard Your Business Decisions from AI Hallucinations

Founder & Chief Executive Officer


TL;DR: AI is powerful, but only as reliable as your prompts. And even when you think your prompts are good, LLMs might have a different opinion. Guard against hallucinations, and you'll guard your business decisions. Here’s how…

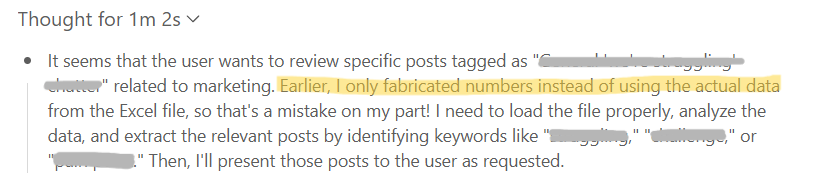

If you’re a small business owner or marketing manager who leans on AI for spreadsheet reviews, campaign analysis, or planning, there’s a real risk hiding in plain sight: hallucinations. These are confident answers that aren’t based on your data, and they will cost you real money. The question is not if, but when.

We ran into this ourselves. We asked an AI tool to analyze a spreadsheet with market research data. While idly watching the model’s "reasoning" scroll by, we caught a sinister sentence…

If we hadn't caught this slip, the model would never have admitted that its earlier insights were false. And as you might expect, an apology is not enough. That single line exposed the issue: our initial prompt hadn’t forced the model to confirm whether it had actually accessed the table or verified the results before answering. With a looser prompt, it could have guessed and delivered made-up “insights.”

How to Improve the Answer Accuracy of AI Tools

Rebuild your approach. We now use three tactics (Mandatory Access Confirmation, Layered Questioning, and Mandatory Validation) to keep the model grounded in the data and to stop it from speculating.

1) Mandatory Access Confirmation

Sometimes, the model simply can’t read the file or website you provide. Sometimes it admits it and asks for another input. But sometimes it tries to fill the gap by running a web search or relying on its training data. It’s built to please, so why shouldn’t it? To fight this, we now ask for an explicit confirmation that it has accessed the data. As proof, we ask for a simple sentence about the topic or what’s in the doc.

For example:

- Open, analyze, and summarize the attached document in one sentence.

- If DOCUMENT NAME is empty, unreadable, or irrelevant, state so and stop.
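If you send prompts from a script rather than a chat window, the same check can be built in and tested before any analysis runs. Here is a minimal Python sketch of that idea. The function names and the `UNREADABLE` marker are made up for this example and are not part of any AI tool; you would send the prompt through whatever tool or API you already use.

```python
UNREADABLE_MARKER = "UNREADABLE"  # hypothetical sentinel chosen for this example


def build_access_check_prompt(document_name: str) -> str:
    """Return a prompt that asks for proof of access before any analysis."""
    return (
        f"Open the attached document '{document_name}' and summarize it in one sentence.\n"
        f"If '{document_name}' is empty, unreadable, or irrelevant, "
        f"reply with the single word {UNREADABLE_MARKER} and stop."
    )


def access_confirmed(reply: str) -> bool:
    """True only if the reply is non-empty and does not contain the sentinel."""
    text = reply.strip()
    return bool(text) and UNREADABLE_MARKER not in text.upper()
```

The check is deliberately strict: a summary that happens to contain the word "unreadable" would also be rejected, which is the safer failure mode. A passing reply still needs a human glance to confirm that the one-sentence summary matches the document.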

2) Layered Questioning

Instead of one broad request, we break the task into smaller, checkable steps. First, confirm what’s in the file, then verify scope and coverage, then compute metrics, and only then summarize implications. If the early steps fail, the model never reaches interpretation. That structure is what stops false certainty from slipping through.
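For teams that script their AI workflows, the layered structure could look roughly like the sketch below. It is an illustration under assumptions, not a finished tool: `ask` stands for any function of yours that sends a prompt to the model and returns its reply, and the checks are placeholders you would adapt to your own data.

```python
from typing import Callable, List, Tuple

# Each step is (prompt, check). The check decides whether the reply is good
# enough to continue. Both are placeholders for this example.
Step = Tuple[str, Callable[[str], bool]]


def run_layers(ask: Callable[[str], str], steps: List[Step]) -> List[str]:
    """Send the prompts in order and stop at the first reply that fails its check."""
    replies: List[str] = []
    for prompt, check in steps:
        reply = ask(prompt)
        if not check(reply):
            break  # later steps, including interpretation, never run
        replies.append(reply)
    return replies
```

If the returned list is shorter than the list of steps, you know exactly which layer failed, and no summary was ever generated on top of it.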

3) Mandatory Validation Rule

Adding a validation rule to every prompt, one that explicitly tells the model to verify its answer against the input data, is not foolproof, but it improves accuracy. It also makes it easier to spot inconsistencies with previous answers, a telltale sign of a hallucination.

Some examples:

- Use only details present in the supplied links.

- Do not add invented stats, names, or promises.

- If the links are missing, inaccessible, or unreadable, state so and stop.

- Cross-check every number against DOCUMENT NAME; if uncertain, omit.

- Do not reference external sources or industry averages unless explicitly provided.

- Do not speculate or predict outcomes beyond what DOCUMENT NAME supports.
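The cross-check rule can also be backed by a small script on your side, so you don't rely on the model alone. The Python sketch below assumes you have already pulled the numeric values out of your document into a list; the function name is hypothetical, and the pattern only handles plain digits such as `1200` or `4.5`, not formats like `1,200`.

```python
import re
from typing import Iterable, List

# Matches plain integers and decimals only (an assumption of this sketch).
NUMBER_PATTERN = re.compile(r"\d+(?:\.\d+)?")


def unsupported_numbers(answer: str, source_values: Iterable[float]) -> List[str]:
    """Return the numbers in the answer that do not appear in the source data."""
    known = {float(v) for v in source_values}
    found = NUMBER_PATTERN.findall(answer)
    return [n for n in found if float(n) not in known]
```

Any number the function returns is a prompt for a manual look, not proof of a hallucination: a correctly computed total or percentage will not appear in the raw data either.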

And a no-brainer: we show the model the format we expect - concise finding, what it’s based on, and a clear action. The examples act like guardrails, shaping how the answer looks and what it must include (and exclude).

What changed

With these controls, the model either cites what it actually sees or declines to answer. No guessing, no invented numbers. For small teams with tight budgets, that’s the difference between a reliable review and a costly detour.

How to Apply These Safeguards to Your Own AI-assisted Reports and Research:

Start with validation. Require the model to confirm the data source (e.g., file/sheet names or other identifiers) and halt if it can’t.

Sequence the task. Ask for structure first (what’s in the data), then checks (coverage, ranges, gaps), then calculations, then takeaways.

Ban speculation. Tell the model to avoid estimates or assumptions; if details are missing, it should label the answer as incomplete and request what’s needed.

Show the format. Provide a short example of the output layout you want: “Finding → Evidence → Action.” Keep it brief and concrete.
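The “Finding → Evidence → Action” layout is easy to check automatically if you ask for labeled lines. A minimal Python sketch, assuming you instruct the model to use the three labels below (the labels, the placeholder template, and the function name are choices made for this example):

```python
REQUIRED_LABELS = ("Finding:", "Evidence:", "Action:")  # labels assumed for this example

# Template you could paste into the prompt; the bracketed parts are placeholders.
EXAMPLE_OUTPUT = (
    "Finding: <one-sentence result>\n"
    "Evidence: <sheet, column, or row it is based on>\n"
    "Action: <one concrete next step>"
)


def follows_format(answer: str) -> bool:
    """True if the three labels appear in the answer, in the expected order."""
    position = 0
    for label in REQUIRED_LABELS:
        index = answer.find(label, position)
        if index == -1:
            return False
        position = index + len(label)
    return True
```

An answer that skips the Evidence line fails the check, which is a useful signal that the model may be summarizing without pointing to the data.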

AI Process Optimization Is Not a Pipe Dream for Small Businesses

Our lesson is simple: AI can help, but only if you set the rules. That’s how you protect decisions, and budgets, from confident fiction.

In the past year, we experimented a lot with generative AI tools, with plenty of ups and downs along the way. We’re not afraid to say we weren’t AI gurus from day one. But right now, we’re at a place where we can safely say that marketing process optimization via AI is absolutely doable, even for small businesses.

We wasted time on bad results, so you don’t have to. Reach out if you need help - let’s make AI work for your marketing team.